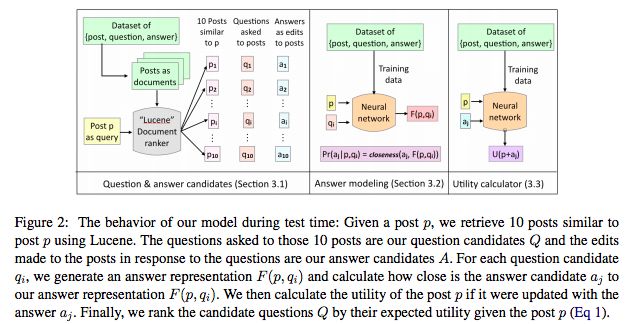

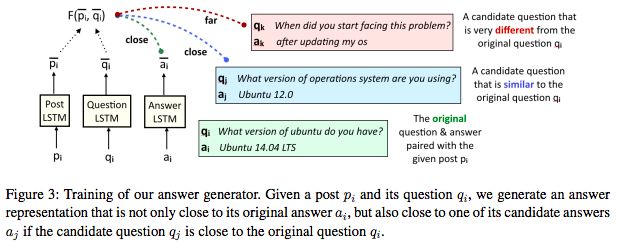

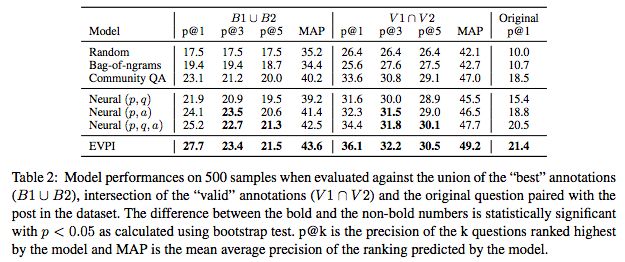

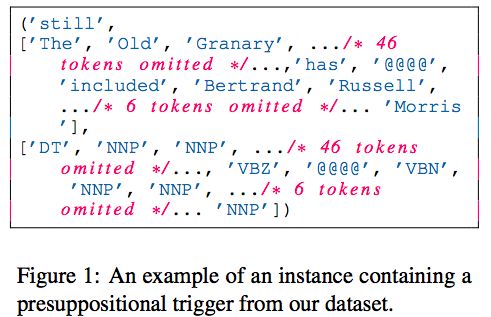

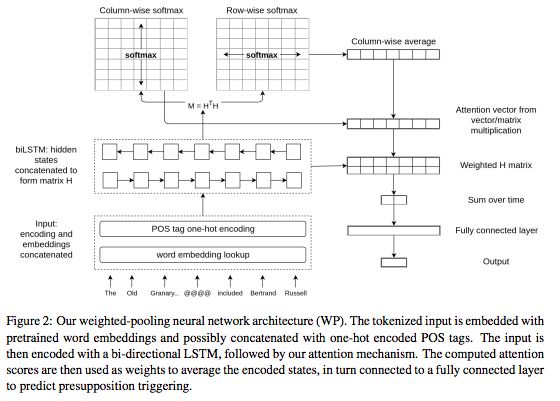

The Association for Computational Linguistics (ACL) is one of the most influential and active international academic organizations, with members all over the world. The ACL conference is the premier conference in the field of computational linguistics and covers a wide range of research areas concerned with computational approaches to natural language. The 56th annual meeting of the Association for Computational Linguistics, ACL 2018, will be held from July 15 to July 20 at the Melbourne Convention and Exhibition Centre in Melbourne, Australia. Yesterday, the ACL official website published the best papers of this conference: 3 best long papers and 2 best short papers.

Best Long Papers

1. Finding syntax in human encephalography with beam search. John Hale, Chris Dyer, Adhiguna Kuncoro and Jonathan Brennan.
2. Learning to Ask Good Questions: Ranking Clarification Questions using Neural Expected Value of Perfect Information. Sudha Rao and Hal Daumé III.
3. Let's do it "again": A First Computational Approach to Detecting Adverbial Presupposition Triggers. Andre Cianflone, Yulan Feng, Jad Kabbara and Jackie Chi Kit Cheung.

Best Short Papers

1. Know What You Don't Know: Unanswerable Questions for SQuAD. Pranav Rajpurkar, Robin Jia and Percy Liang.
2. 'Lighter' Can Still Be Dark: Modeling Comparative Color Descriptions. Olivia Winn and Smaranda Muresan.

According to the ACL official website, this year's conference was highly competitive: 258 of 1018 long papers and 126 of 526 short papers were accepted, for an overall acceptance rate of 24.9%.
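The overall acceptance rate can be checked from the submission counts quoted above. The short sketch below only redoes that arithmetic; the per-track rates it prints are derived from the same counts and are not figures stated on the ACL website.

```python
# Submission and acceptance counts quoted in the article.
long_accepted, long_submitted = 258, 1018
short_accepted, short_submitted = 126, 526


def acceptance_rate(accepted: int, submitted: int) -> float:
    """Return the acceptance rate as a percentage."""
    return 100.0 * accepted / submitted


# Per-track rates (derived here, not quoted in the article).
long_rate = acceptance_rate(long_accepted, long_submitted)
short_rate = acceptance_rate(short_accepted, short_submitted)

# Overall rate: all accepted papers over all submitted papers.
overall_rate = acceptance_rate(
    long_accepted + short_accepted,
    long_submitted + short_submitted,
)

print(f"long: {long_rate:.1f}%  short: {short_rate:.1f}%  overall: {overall_rate:.1f}%")
```

The overall figure is (258 + 126) / (1018 + 526) = 384 / 1544, which rounds to the 24.9% reported.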
Best Papers - Long Papers (3)

1. Finding syntax in human encephalography with beam search.

Authors: John Hale, Chris Dyer, Adhiguna Kuncoro and Jonathan Brennan. (The paper has not yet been released.)

2. Learning to Ask Good Questions: Ranking Clarification Questions using Neural Expected Value of Perfect Information.

Authors: Sudha Rao and Hal Daumé III

Paper address: https://arxiv.org/pdf/1805.04655.pdf

Abstract: Inquiry is fundamental to communication, and machines cannot effectively collaborate with humans unless they can ask questions. In this work, we build a neural network model for ranking clarification questions. Our model is inspired by the idea of expected value of perfect information: a good question is one whose expected answer will be useful. We study this problem using data from StackExchange, a rich online resource where people routinely ask clarifying questions about posts so that they can better help the original poster. We create a dataset of about 77K posts, each paired with a clarification question and answer. We evaluate our model on 500 samples from this dataset against expert human judgments and demonstrate significant improvements over controlled baselines.

Figures: the behavior of the model during testing; answer generator training; model performance on the 500-sample dataset.

3. Let's do it "again": A First Computational Approach to Detecting Adverbial Presupposition Triggers.

Authors: Andre Cianflone, Yulan Feng, Jad Kabbara and Jackie Chi Kit Cheung.

Paper address: https://~jkabba/acl2018paper.pdf

Abstract: In this paper, we introduce the task of predicting adverbial presupposition triggers such as "also" and "again". Solving this task requires detecting recurring or similar events in the discourse context, and it has applications in natural language generation tasks such as summarization and dialogue systems. We create two new datasets for the task, derived from the Penn Treebank and the Annotated English Gigaword corpora, as well as a new attention mechanism tailored to this task. Our attention mechanism augments a baseline recurrent neural network without requiring additional trainable parameters, which minimizes the added computational cost. We show that our model statistically outperforms a number of baselines, including an LSTM-based language model.

Figures: a sample containing a presupposition trigger from our dataset; the weighted-pooling neural network architecture.

Best Papers - Short Papers (2)

1. Know What You Don't Know: Unanswerable Questions for SQuAD.

Authors: Pranav Rajpurkar, Robin Jia and Percy Liang. (The paper has not yet been released.)

2. 'Lighter' Can Still Be Dark: Modeling Comparative Color Descriptions.

Authors: Olivia Winn and Smaranda Muresan. (The paper has not yet been released.)

This year's ACL opened a "meta conference" session to discuss double-blind review and arXiv preprints. Many studies have shown that, when the objective quality of the work is held constant, single-blind review biases reviewers in favor of certain types of researchers. For this reason, all ACL conferences and most workshops use double-blind review. The popularity of online preprint servers such as arXiv threatens the double-blind review process to some extent. Last week, ACL updated its submission, review, and citation policies for conference papers. To keep double-blind review effective, the new policy prohibits posting a submitted paper on preprint platforms such as arXiv within one month before the submission deadline. The new requirements have led some to question the effectiveness of double-blind review, but most researchers have expressed support for the new policy. As a result, the remaining best papers may not be available until after the conference in July.
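The ranking idea in the abstract of "Learning to Ask Good Questions" above, that a good question is one whose expected answer is useful, can be illustrated with a toy calculation. The sketch below is not the authors' neural model: the candidate questions, answer probabilities, and utility values are hand-set hypothetical numbers, and the function names are invented for illustration. It only shows the expected-value scoring, in which each question is scored by the sum over its possible answers of the probability of that answer times the utility of receiving it.

```python
from typing import Dict, List, Tuple


def expected_value(answer_probs: Dict[str, float],
                   answer_utility: Dict[str, float]) -> float:
    """Score a question as the sum over answers of P(answer) * U(answer)."""
    return sum(p * answer_utility[a] for a, p in answer_probs.items())


def rank_questions(candidates: Dict[str, Dict[str, float]],
                   answer_utility: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (question, score) pairs sorted from most to least useful."""
    scored = [(q, expected_value(probs, answer_utility))
              for q, probs in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical utilities: how much each answer would improve the original post.
answer_utility = {
    "Ubuntu 16.04": 0.9,
    "It crashes on startup": 0.6,
    "Yes": 0.1,
}

# Hypothetical answer distributions for three candidate clarification questions.
candidates = {
    "Which OS version are you using?": {"Ubuntu 16.04": 0.8, "Yes": 0.2},
    "What happens when you run it?": {"It crashes on startup": 0.7, "Yes": 0.3},
    "Did you try restarting?": {"Yes": 1.0},
}

ranking = rank_questions(candidates, answer_utility)
for question, score in ranking:
    print(f"{score:.2f}  {question}")
```

In the paper, the answer probabilities and utilities are produced by learned neural components rather than fixed by hand; here they are constants so that the scoring step can be followed directly.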
